In this tutorial, we implement an advanced Optuna workflow that systematically explores pruning, multi-objective optimization, custom callbacks, and visualization. Each snippet shows how Optuna helps us shape search spaces, cut wasted compute, and extract insights that guide model improvement. We work with scikit-learn's built-in breast cancer and diabetes datasets, design efficient search strategies, and analyze trial behavior interactively. Check out the FULL CODES here.

```python
import optuna
from optuna.pruners import MedianPruner
from optuna.samplers import TPESampler
import numpy as np
from sklearn.datasets import load_breast_cancer, load_diabetes
from sklearn.model_selection import cross_val_score, KFold
from sklearn.ensemble import RandomForestClassifier, GradientBoostingClassifier
import matplotlib.pyplot as plt


def objective_with_pruning(trial):
    X, y = load_breast_cancer(return_X_y=True)
    params = {
        'n_estimators': trial.suggest_int('n_estimators', 50, 200),
        'min_samples_split': trial.suggest_int('min_samples_split', 2, 20),
        'min_samples_leaf': trial.suggest_int('min_samples_leaf', 1, 10),
        'subsample': trial.suggest_float('subsample', 0.6, 1.0),
        'max_features': trial.suggest_categorical('max_features', ['sqrt', 'log2', None]),
    }
    model = GradientBoostingClassifier(**params, random_state=42)
    kf = KFold(n_splits=3, shuffle=True, random_state=42)
    scores = []
    for fold, (train_idx, val_idx) in enumerate(kf.split(X)):
        X_train, X_val = X[train_idx], X[val_idx]
        y_train, y_val = y[train_idx], y[val_idx]
        model.fit(X_train, y_train)
        scores.append(model.score(X_val, y_val))
        # Report the running mean accuracy after each fold so the pruner can compare trials
        trial.report(np.mean(scores), fold)
        if trial.should_prune():
            raise optuna.TrialPruned()
    return np.mean(scores)


study1 = optuna.create_study(
    direction='maximize',
    sampler=TPESampler(seed=42),
    # Wait for 5 trials and skip fold 0 before any pruning decision
    pruner=MedianPruner(n_startup_trials=5, n_warmup_steps=1),
)
study1.optimize(objective_with_pruning, n_trials=30, show_progress_bar=True)
print(study1.best_value, study1.best_params)
```

We set up the core imports and define our first objective function, which scores a Gradient Boosting classifier with 3-fold cross-validation and reports the running mean accuracy after each fold. `MedianPruner` stops a trial once its intermediate accuracy falls below the median of earlier trials at the same fold (after 5 startup trials and a one-step warm-up), while the TPE sampler steers new trials toward promising hyperparameter regions. Together, they let the study spend less compute on weak configurations as it progresses.
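To make the pruning rule concrete, here is a simplified pure-Python sketch of the median-pruning decision for a maximization objective. It is not Optuna's source code: the function name `should_prune_median` and the dict-based trial history are hypothetical, and it only approximates how `MedianPruner` uses `n_startup_trials` (completed trials required before pruning) and `n_warmup_steps` (steps before pruning is allowed).

```python
from statistics import median


def should_prune_median(current, history, step, n_startup_trials=5, n_warmup_steps=1):
    """Simplified median-pruning rule for a *maximization* objective (hypothetical helper).

    current: {step: intermediate value} reported so far by the running trial
    history: list of {step: intermediate value} dicts from completed trials
    """
    # No pruning until enough trials have completed and the warm-up steps have passed
    if len(history) < n_startup_trials or step < n_warmup_steps:
        return False
    # Intermediate values that earlier trials reported at this same step
    peers = [h[step] for h in history if step in h]
    if not peers:
        return False
    # Best value the running trial has reported up to and including this step
    best_so_far = max(v for s, v in current.items() if s <= step)
    return best_so_far < median(peers)


# Hypothetical fold-by-fold accuracies of five completed trials
history = [
    {0: 0.90, 1: 0.92}, {0: 0.91, 1: 0.93}, {0: 0.95, 1: 0.96},
    {0: 0.94, 1: 0.95}, {0: 0.93, 1: 0.94},
]
print(should_prune_median({0: 0.90, 1: 0.91}, history, step=1))  # True: 0.91 < median 0.94
print(should_prune_median({0: 0.97, 1: 0.95}, history, step=1))  # False: 0.97 >= 0.94
```

Comparing the running trial's best value (rather than its latest one) against the peer median makes the rule tolerant of a single noisy fold.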

def multi_objective(trial):

X, y = load_breast_cancer(return_X_y=True)

n_estimators = trial.suggest_int('n_estimators', 10, 200)

max_depth = trial.suggest_int('max_depth', 2, 20)

min_samples_split = trial.suggest_int('min_samples_split', 2, 20)

model = RandomForestClassifier(

n_estimators=n_estimators,

max_depth=max_depth,

min_samples_split=min_samples_split,

random_state=42,

n_jobs=-1

)

accuracy = cross_val_score(model, X, y, cv=3, scoring='accuracy', n_jobs=-1).mean()

complexity = n_estimators * max_depth

return accuracy, complexity

study2 = optuna.create_study(

directions=['maximize', 'minimize'],

sampler=TPESampler(seed=42)

)

study2.optimize(multi_objective, n_trials=50, show_progress_bar=True)

for t in study2.best_trials[:3]:

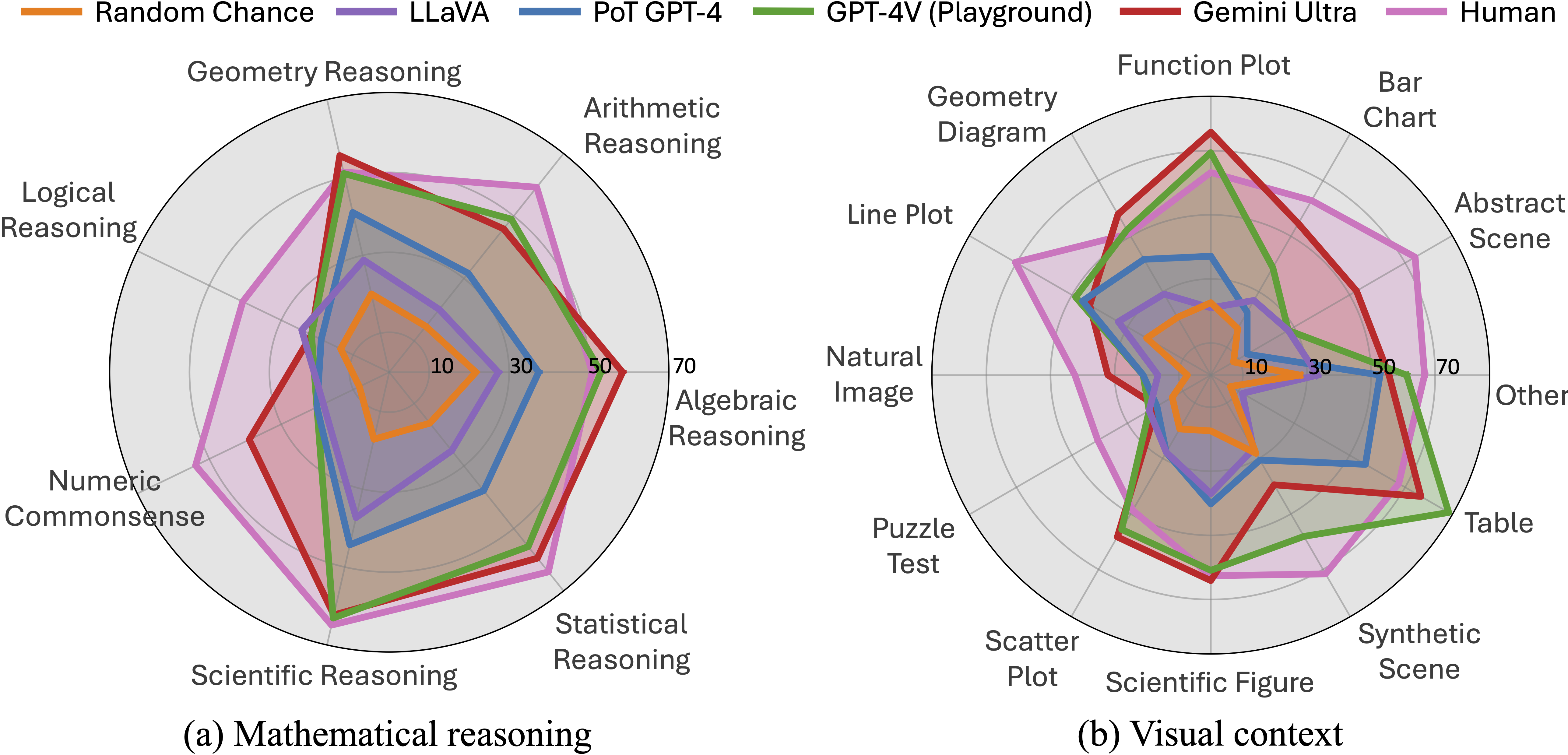

print(t.number, t.values)We shift to a multi-objective setup where we optimize both accuracy and model complexity. As we explore different configurations, we see how Optuna automatically builds a Pareto front, letting us compare trade-offs rather than chasing a single score. This provides us with a deeper understanding of how competing metrics interact with one another. Check out the FULL CODES here.
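The Pareto front that `study2.best_trials` returns can be illustrated with a small brute-force filter. The helpers below are hypothetical and meant for intuition, not a description of how Optuna computes the front internally; they assume each trial is reduced to an `(accuracy, complexity)` tuple, with accuracy maximized and complexity minimized to match `directions=['maximize', 'minimize']`.

```python
def dominates(a, b):
    """True if point a dominates point b for (maximize accuracy, minimize complexity)."""
    acc_a, cx_a = a
    acc_b, cx_b = b
    no_worse = acc_a >= acc_b and cx_a <= cx_b
    strictly_better = acc_a > acc_b or cx_a < cx_b
    return no_worse and strictly_better


def pareto_front(points):
    """Keep the points that no other point dominates (O(n^2), fine for small studies)."""
    # A point never dominates itself, so comparing against the full list is safe
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Hypothetical (accuracy, complexity) pairs, not results from the study above
points = [(0.95, 400), (0.96, 1000), (0.94, 200), (0.93, 500), (0.96, 1200)]
print(pareto_front(points))  # [(0.95, 400), (0.96, 1000), (0.94, 200)]
```

With real results, the input could be built as `[tuple(t.values) for t in study2.trials if t.values]`.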

```python
from sklearn.linear_model import Ridge


class EarlyStoppingCallback:
    """Stop the study after `early_stopping_rounds` completed trials without improvement."""

    def __init__(self, early_stopping_rounds=10, direction='maximize'):
        self.early_stopping_rounds = early_stopping_rounds
        self.direction = direction
        self.best_value = float('-inf') if direction == 'maximize' else float('inf')
        self.counter = 0

    def __call__(self, study, trial):
        # Optuna calls each callback at the end of every trial; skip pruned or failed ones
        if trial.state != optuna.trial.TrialState.COMPLETE:
            return
        v = trial.value
        if self.direction == 'maximize':
            if v > self.best_value:
                self.best_value, self.counter = v, 0
            else:
                self.counter += 1
        else:
            if v < self.best_value:
                self.best_value, self.counter = v, 0
            else:
                self.counter += 1
        if self.counter >= self.early_stopping_rounds:
            study.stop()


def objective_regression(trial):
    X, y = load_diabetes(return_X_y=True)
    alpha = trial.suggest_float('alpha', 1e-3, 10.0, log=True)
    # With Ridge's default solver='auto', dense data is solved directly (Cholesky),
    # which ignores max_iter, so tuning it likely has no effect on the score
    max_iter = trial.suggest_int('max_iter', 100, 2000)
    model = Ridge(alpha=alpha, max_iter=max_iter, random_state=42)
    # cross_val_score returns the negated MSE (higher is better), so flip the sign
    score = cross_val_score(model, X, y, cv=5, scoring='neg_mean_squared_error', n_jobs=-1).mean()
    return -score


early_stopping = EarlyStoppingCallback(early_stopping_rounds=15, direction='minimize')
study3 = optuna.create_study(direction='minimize', sampler=TPESampler(seed=42))
study3.optimize(objective_regression, n_trials=100, callbacks=[early_stopping], show_progress_bar=True)
print(study3.best_value, study3.best_params)
```

We introduce a custom early-stopping callback and attach it to a Ridge regression objective on the diabetes dataset. Optuna calls the callback after every trial; once 15 consecutive completed trials fail to improve on the best MSE, it calls `study.stop()`, so the study can end before all 100 trials run and save compute. This shows how callbacks let us adapt Optuna's control flow to our own stopping rules.
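The callback's patience logic can be checked offline with a plain function that replays a sequence of completed-trial values and reports where `study.stop()` would fire. The helper name `early_stop_index` and the sample values are hypothetical; the counting rule is intended to mirror `EarlyStoppingCallback` above, where any non-improving trial (including a tie) increments the counter.

```python
def early_stop_index(values, rounds, direction="minimize"):
    """Return the index of the trial at which the patience rule stops, or None."""
    best = float("inf") if direction == "minimize" else float("-inf")
    counter = 0
    for i, v in enumerate(values):
        improved = v < best if direction == "minimize" else v > best
        if improved:
            best, counter = v, 0  # new best: reset the patience counter
        else:
            counter += 1  # no improvement (ties count as no improvement)
        if counter >= rounds:
            return i
    return None


# Hypothetical MSE values from completed trials
print(early_stop_index([3000, 2950, 2960, 2970, 2940], rounds=2))  # 3
```

Note that the later improvement to 2940 is never reached: the rule stops as soon as the patience budget is exhausted, which is the trade-off behind choosing `early_stopping_rounds`.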

```python
fig, axes = plt.subplots(2, 2, figsize=(14, 10))

# Optimization history of study 1 with its best value as a dashed line
ax = axes[0, 0]
values = [t.value for t in study1.trials if t.value is not None]
ax.plot(values, marker="o", markersize=3)
ax.axhline(y=study1.best_value, color="r", linestyle="--")
ax.set_title('Study 1 History')
ax.set_xlabel('Trial index')
ax.set_ylabel('CV accuracy')

# Top-5 hyperparameter importances for study 1
ax = axes[0, 1]
importance = optuna.importance.get_param_importances(study1)
params = list(importance.keys())[:5]
vals = [importance[p] for p in params]
ax.barh(params, vals)
ax.set_title('Param Importance')

# All study 2 trials, with the Pareto-optimal ones highlighted in red
ax = axes[1, 0]
for t in study2.trials:
    if t.values:
        ax.scatter(t.values[0], t.values[1], color="tab:blue", alpha=0.3)
for t in study2.best_trials:
    ax.scatter(t.values[0], t.values[1], c="red", s=90)
ax.set_title('Pareto Front')
ax.set_xlabel('Accuracy')
ax.set_ylabel('Complexity (n_estimators * max_depth)')

# max_depth is only tuned in study 2, so plot it against study 2's accuracy (values[0])
ax = axes[1, 1]
pairs = [(t.params['max_depth'], t.values[0]) for t in study2.trials
         if t.state == optuna.trial.TrialState.COMPLETE]
Xv, Yv = zip(*pairs) if pairs else ([], [])
ax.scatter(Xv, Yv, alpha=0.6)
ax.set_title('max_depth vs Accuracy')
ax.set_xlabel('max_depth')
ax.set_ylabel('CV accuracy')

plt.tight_layout()
plt.savefig('optuna_analysis.png', dpi=150)
plt.show()
```

We visualize everything we have run so far: the optimization history of study 1, its top parameter importances, the Pareto front of study 2, and the relationship between `max_depth` and accuracy in study 2. Reading the four panels together shows which hyperparameters matter most and where the best-performing configurations lie.
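The history panel draws the final best value as a flat dashed line. A running best-so-far curve also shows when each improvement happened; the hypothetical helper below computes it with `itertools.accumulate`, assuming `values` is the list of trial values gathered for the first panel. If you prefer not to hand-roll this plot, Optuna's `optuna.visualization.matplotlib.plot_optimization_history` offers a built-in history plot.

```python
from itertools import accumulate


def best_so_far(values, direction="maximize"):
    """Running best objective value after each trial (hypothetical helper)."""
    pick = max if direction == "maximize" else min
    # accumulate applies pick pairwise, carrying the best value seen so far
    return list(accumulate(values, pick))


print(best_so_far([0.90, 0.95, 0.93, 0.97]))  # [0.9, 0.95, 0.95, 0.97]
```

In the first panel, `ax.plot(best_so_far(values), color="r")` could stand in for the `axhline` call.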

```python
# Share of study 1 trials stopped early by the MedianPruner
p1 = len([t for t in study1.trials if t.state == optuna.trial.TrialState.PRUNED])
print("Study 1 Best Accuracy:", study1.best_value)
print("Study 1 Pruned %:", p1 / len(study1.trials) * 100)
print("Study 2 Pareto Solutions:", len(study2.best_trials))
print("Study 3 Best MSE:", study3.best_value)
# Fewer than 100 trials here means the early-stopping callback ended the study
print("Study 3 Trials:", len(study3.trials))
```

We summarize the key results of all three studies: the best accuracy, the share of pruned trials, the number of Pareto-optimal solutions, the best regression MSE, and how many trials study 3 ran before stopping. Condensing the runs into a few numbers makes them easy to compare and gives a solid starting point for more advanced experiments.
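The summary reports only the pruned share for study 1. A fuller breakdown of trial states (for example COMPLETE, PRUNED, FAIL) can be computed with `collections.Counter`; the helper `state_breakdown` is hypothetical and expects a list of state names such as `[t.state.name for t in study1.trials]`.

```python
from collections import Counter


def state_breakdown(states):
    """Percentage of trials in each state, given a list of state names (hypothetical helper)."""
    total = len(states)
    if total == 0:
        return {}
    return {state: 100 * n / total for state, n in Counter(states).items()}


print(state_breakdown(["COMPLETE", "PRUNED", "PRUNED", "COMPLETE"]))
# {'COMPLETE': 50.0, 'PRUNED': 50.0}
```

Seeing failed trials alongside pruned ones helps separate search-space problems (crashes) from the pruner simply doing its job.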

In conclusion, we built a hyperparameter optimization pipeline that goes beyond single-metric tuning, combining pruning, Pareto (multi-objective) optimization, early stopping, and visual analysis into one flexible workflow. The same template can be adapted to other ML or deep learning models we want to optimize, giving us a practical blueprint for Optuna-based experimentation.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.