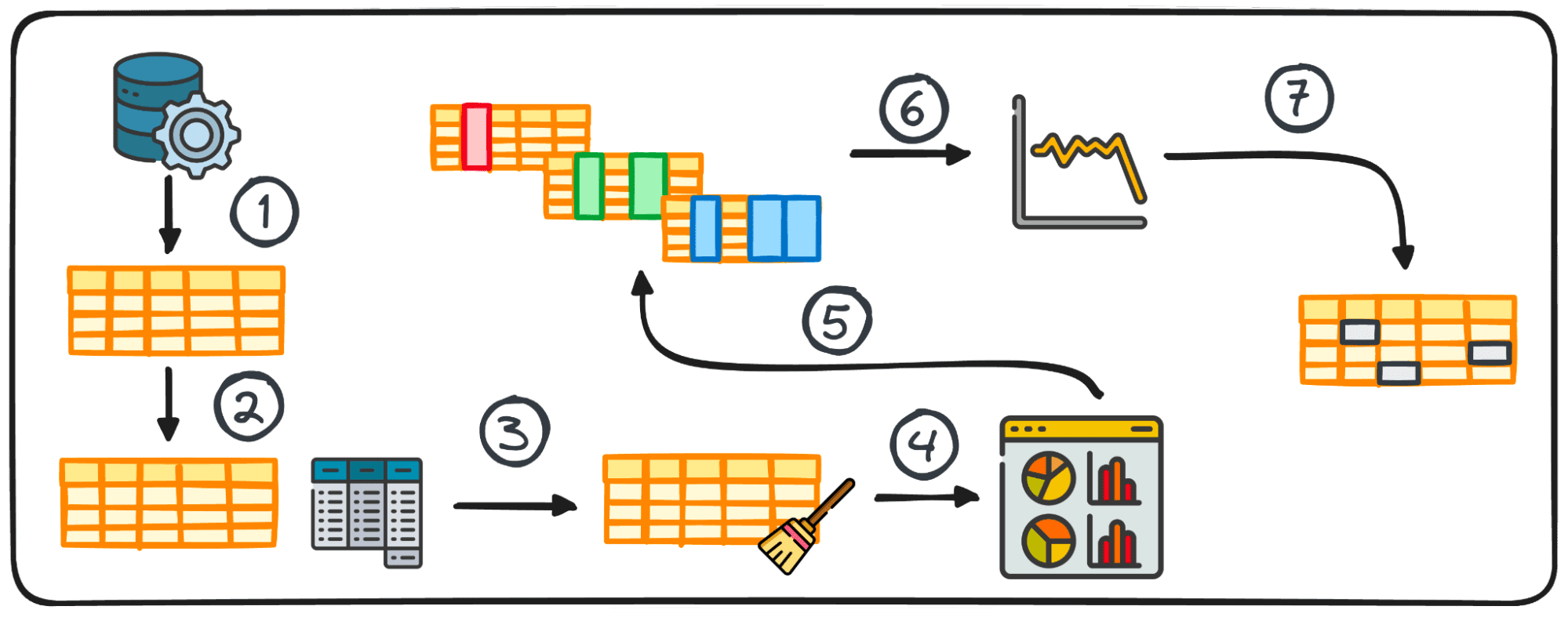

Image by Author

Image by Author# Introduction

Data has become an indispensable resource for any successful business, as it provides valuable insights for informed decision-making. Given the importance of data, many companies are building systems to store and analyze it. However, there are many times when it’s hard to acquire and analyze the necessary data, especially with the increasing complexity of the data system.

With the advent of generative AI, data work has become significantly easier, as we can now use simple natural language to receive mostly accurate output that closely follows the input we provide. It’s also applicable to data processing and analysis with SQL, where we can ask for query development.

In this article, we will develop a simple API application that translates natural language into SQL queries that our database understands. We will use three main tools: OpenAI, FastAPI, and SQLite.

Here’s the plan.

# Text-to-SQL App Development

First, we’ll prepare everything needed for our project. All you need to provide is the OpenAI API key, which we’ll use to access the generative model. To containerize the application, we will use Docker, which you can acquire for the local implementation using Docker Desktop.

Other components, such as SQLite, will already be available when you install Python, and FastAPI will be installed later.

For the overall project structure, we will use the following:

text_to_sql_app/

├── app/

│ ├── __init__.py

│ ├── database.py

│ ├── openai_utils.py

│ └── main.py

├── demo.db

├── init_db.sql

├── requirements.txt

├── Dockerfile

├── docker-compose.yml

├── .env

Create the structure like above, or you can use the following repository to make things easier. We will still go through each file to gain an understanding of how to develop the application.

Let’s start by populating the .env file with the OpenAI API key we previously acquired. You can do that with the following code:

OPENAI_API_KEY=YOUR-API-KEY

Then, go to the requirements.txt to fill in the necessary libraries we will use for

fastapi

uvicorn

sqlalchemy

openai

pydantic

python-dotenv

Next, we move on to the __init__.py file, and we will put the following code inside:

from pathlib import Path

from dotenv import load_dotenv

load_dotenv(dotenv_path=Path(__file__).resolve().parent.parent / ".env", override=False)

The code above ensures that the environment contains all the necessary keys we need.

Then, we will develop Python code in the database.py file to connect to the SQLite database we will create later (calleddemo.db) and provide a way to run SQL queries.

from sqlalchemy import create_engine, text

from sqlalchemy.orm import Session

ENGINE = create_engine("sqlite:///demo.db", future=True, echo=False)

def run_query(sql: str) -> list[dict]:

with Session(ENGINE) as session:

rows = session.execute(text(sql)).mappings().all()

return [dict(r) for r in rows]

After that, we will prepare the openai_utils.py file that will accept the database schema and the input questions. The output will be JSON containing the SQL query (with a guard to prevent any write operations).

import os

import json

from openai import OpenAI

client = OpenAI(api_key=os.getenv("OPENAI_API_KEY"))

_SYSTEM_PROMPT = """

You convert natural-language questions into read-only SQLite SQL.

Never output INSERT / UPDATE / DELETE.

Return JSON: { "sql": "..." }.

"""

def text_to_sql(question: str, schema: str) -> str:

response = client.chat.completions.create(

model="gpt-4o-mini",

temperature=0.1,

response_format={"type": "json_object"},

messages=[

{"role": "system", "content": _SYSTEM_PROMPT},

{"role": "user",

"content": f"schema:\n{schema}\n\nquestion: {question}"}

]

)

payload = json.loads(response.choices[0].message.content)

return payload["sql"]

With both the code and the connection ready, we will prepare the application using FastAPI. The application will accept natural language questions and the database schema, convert them into SQL SELECT queries, run them through the SQLite database, and return the results as JSON. The application will be an API we can access via the CLI.

from fastapi import FastAPI, HTTPException

from pydantic import BaseModel

from sqlalchemy import inspect

from .database import ENGINE, run_query

from .openai_utils import text_to_sql

app = FastAPI(title="Text-to-SQL Demo")

class NLRequest(BaseModel):

question: str

@app.on_event("startup")

def capture_schema() -> None:

insp = inspect(ENGINE)

global SCHEMA_STR

SCHEMA_STR = "\n".join(

f"CREATE TABLE {t} ({', '.join(c['name'] for c in insp.get_columns(t))});"

for t in insp.get_table_names()

)

@app.post("/query")

def query(req: NLRequest):

try:

sql = text_to_sql(req.question, SCHEMA_STR)

if not sql.lstrip().lower().startswith("select"):

raise ValueError("Only SELECT statements are allowed")

return {"sql": sql, "result": run_query(sql)}

except Exception as e:

raise HTTPException(status_code=400, detail=str(e))

That is everything we need for the main application. The next thing we will prepare is the database. Use the database below in the init_db.sql for example purposes, but you can always change it if you want.

DROP TABLE IF EXISTS order_items;

DROP TABLE IF EXISTS orders;

DROP TABLE IF EXISTS payments;

DROP TABLE IF EXISTS products;

DROP TABLE IF EXISTS customers;

CREATE TABLE customers (

id INTEGER PRIMARY KEY,

name TEXT NOT NULL,

country TEXT,

signup_date DATE

);

CREATE TABLE products (

id INTEGER PRIMARY KEY,

name TEXT NOT NULL,

category TEXT,

price REAL

);

CREATE TABLE orders (

id INTEGER PRIMARY KEY,

customer_id INTEGER,

order_date DATE,

total REAL,

FOREIGN KEY (customer_id) REFERENCES customers(id)

);

CREATE TABLE order_items (

order_id INTEGER,

product_id INTEGER,

quantity INTEGER,

unit_price REAL,

PRIMARY KEY (order_id, product_id),

FOREIGN KEY (order_id) REFERENCES orders(id),

FOREIGN KEY (product_id) REFERENCES products(id)

);

CREATE TABLE payments (

id INTEGER PRIMARY KEY,

order_id INTEGER,

payment_date DATE,

amount REAL,

method TEXT,

FOREIGN KEY (order_id) REFERENCES orders(id)

);

INSERT INTO customers (id, name, country, signup_date) VALUES

(1,'Alice','USA','2024-01-05'),

(2,'Bob','UK','2024-03-10'),

(3,'Choi','KR','2024-06-22'),

(4,'Dara','ID','2025-01-15');

INSERT INTO products (id, name, category, price) VALUES

(1,'Laptop Pro','Electronics',1500.00),

(2,'Noise-Canceling Headphones','Electronics',300.00),

(3,'Standing Desk','Furniture',450.00),

(4,'Ergonomic Chair','Furniture',250.00),

(5,'Monitor 27"','Electronics',350.00);

INSERT INTO orders (id, customer_id, order_date, total) VALUES

(1,1,'2025-02-01',1850.00),

(2,2,'2025-02-03',600.00),

(3,3,'2025-02-05',350.00),

(4,1,'2025-02-07',450.00);

INSERT INTO order_items (order_id, product_id, quantity, unit_price) VALUES

(1,1,1,1500.00),

(1,2,1,300.00),

(1,5,1,350.00),

(2,3,1,450.00),

(2,4,1,250.00),

(3,5,1,350.00),

(4,3,1,450.00);

INSERT INTO payments (id, order_id, payment_date, amount, method) VALUES

(1,1,'2025-02-01',1850.00,'Credit Card'),

(2,2,'2025-02-03',600.00,'PayPal'),

(3,3,'2025-02-05',350.00,'Credit Card'),

(4,4,'2025-02-07',450.00,'Bank Transfer');

Then, run the following code in your CLI to create a SQLite database for our project.

sqlite3 demo.db < init_db.sql

With the database ready, we will create a Dockerfile to containerize our application.

FROM python:3.12-slim

WORKDIR /code

COPY requirements.txt .

RUN pip install --no-cache-dir -r requirements.txt

COPY . .

CMD ["uvicorn", "app.main:app", "--host", "0.0.0.0", "--port", "8000"]

We will also create a docker-compose.yml file for running the application more smoothly.

services:

text2sql:

build: .

env_file: .env

ports:

- "8000:8000"

restart: unless-stopped

volumes:

- ./demo.db:/code/demo.db

With everything ready, start your Docker Desktop and run the following code to build the application.

docker compose build --no-cache

docker compose up -d

If everything is done well, you can test the application by using the following code. We will ask how many customers we have in the data.

curl -X POST "http://localhost:8000/query" -H "Content-Type: application/json" -d "{\"question\":\"How many customers?\"}"

The output will look like this.

{"sql":"SELECT COUNT(*) AS customer_count FROM customers;","result":[{"customer_count":4}]}

We can try something more complex, like the number of orders for each customer:

curl -X POST "http://localhost:8000/query" -H "Content-Type: application/json" -d "{\"question\":\"What is the number of orders placed by each customer\"}"

With output like below.

{"sql":"SELECT customer_id, COUNT(*) AS number_of_orders FROM orders GROUP BY customer_id;","result":[{"customer_id":1,"number_of_orders":2},{"customer_id":2,"number_of_orders":1},{"customer_id":3,"number_of_orders":1}]}

That’s all you need to build a basic Text-to-SQL application. You can enhance it further with a front-end interface and a more complex system tailored to your needs.

# Wrapping Up

Data is the heart of any data work, and companies use it to make decisions. Many times, the system we have is too complex, and we need to rely on generative AI to help us navigate it.

In this article, we have learned how to develop a simple Text-to-SQL application using the OpenAI model, FastAPI, and SQLite.

I hope this has helped!

Cornellius Yudha Wijaya is a data science assistant manager and data writer. While working full-time at Allianz Indonesia, he loves to share Python and data tips via social media and writing media. Cornellius writes on a variety of AI and machine learning topics.